Final chapter. End of the road. I am refunding my own mental time to focus on another project. Not because writing down my thoughts hasn’t been a useful exercise. In fact, it has been immeasurably valuable to me. It provided some mental clarity, allowed me to think through my shifting worldview, and helped me reestablish myself in a world I found to be chaotic and unpredictable where once it was stable and concrete, albeit without any particular direction other than ‘onwards’.

To be clear, I still don’t know which direction we are going; I can only be the master of my own path. You make your own destiny, and in the words of Epictetus:

“Freedom is the only worthy goal in life. It is won by disregarding things that lie beyond our control.”

Peak Probability

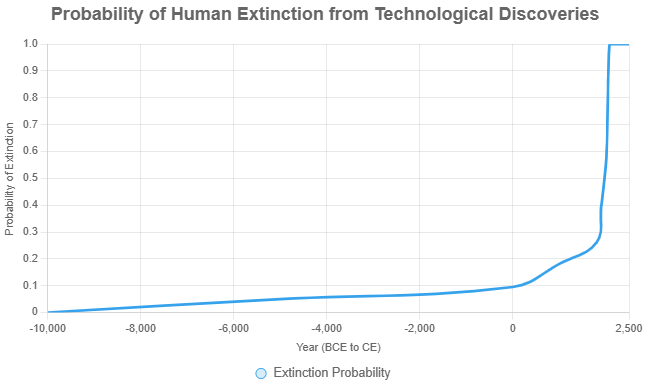

“Consider yourself lucky to be alive” might be closer to the bone than most people think. What is the probability that humans have managed to survive as long as we have and not metaphorically blow ourselves up in the process? There are several conceptual frameworks that we can use to explore this, one of them being the Vulnerable World Hypothesis proposed by philosopher Nick Bostrom. This framework likens technological progress to drawing balls from an urn or a box, where white balls represent beneficial or neutral technologies, and a black ball represents a technology that could cause human extinction.

The hypothesis suggests that the longer humanity draws from the urn (i.e., develops new technologies), the higher the chance of encountering a black ball, especially as technological advancement accelerates. If we consider that humanity has made approximately 10,000 technological discoveries since the agricultural revolution, roughly in line with the global population explosion, the probability of encountering a metaphorical black ball in the next century could range from 1% to 90%, depending on the rate of innovation and the proportion of dangerous technologies.

An article on lesswrong.com applied Bayesian reasoning to argue that the emergence of ASI (Artificial Super Intelligence) represents an existential threat to humanity, akin to chimpanzees attempting to control the behavior of humans. The article puts the chance of extinction at 99.98%. The development of ASI could lead to an explosion of technological progress: new discoveries and new dangerous technologies. Technology by its very nature is exponential, so the chances of us developing dangerous technology accelerate over time. Indeed, ASI represents a “Schrödinger’s Ball” with a high likelihood of being black due to alignment challenges, but we won’t know until it happens.

Warp speed, Mr. Sulu.

I want to take this one step further, or darker, if you will. Bostrom’s framework establishes the probability of us creating an unmanageable technology. Bayesian reasoning applied to the emergence of ASI suggests that this probability will likely accelerate due to the inevitable exponential nature of technology. The advancement of science also accelerates with the number of human beings who are alive. Approximately 0.0032% of the global population, or about 262,400 people, have an IQ of 160 or higher, corresponding to an “Einstein-level” IQ based on the most common estimate. This is derived from the normal distribution of IQ scores and a global population of 8.2 billion. If we continue to grow the human population, perhaps in an off-world scenario, to, say, 1 trillion, how many Einsteins will we have? 32 million.
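The headcount above can be checked with a few lines of Python. This is only a sketch: it assumes IQ follows a normal distribution with mean 100 and standard deviation 15 (the usual convention, though the text does not state it), and the function name `tail_fraction` is made up for illustration.

```python
import math

def tail_fraction(iq_threshold, mean=100.0, sd=15.0):
    """Share of a normal population at or above iq_threshold.

    Assumption: IQ ~ Normal(mean=100, sd=15).
    """
    z = (iq_threshold - mean) / sd
    # Upper-tail probability of the standard normal distribution.
    return 0.5 * math.erfc(z / math.sqrt(2))

exact = tail_fraction(160)   # share implied by the normal curve
rounded = 0.000032           # the 0.0032% figure used in the text

for population in (5e6, 8.2e9, 1e12):
    print(
        f"population {population:.3g}: "
        f"{exact * population:,.0f} (normal tail), "
        f"{rounded * population:,.0f} (0.0032% rounded)"
    )
```

The normal tail comes out slightly below the rounded 0.0032%, so the figures in the text sit a touch above what the curve itself gives. The difference is small enough not to change the argument.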

Let’s consider the following:

- Timeline: From 10,000 BCE (agricultural revolution) to ~2500 CE (1 trillion population).

- Existential Risk: 0.01% (1 in 10,000) chance per technological discovery of a “black ball” causing extinction

- Discoveries: ~10,000 significant technological discoveries from 10,000 BCE to 2025, with an exponential increase post-2025 due to AI/ASI (e.g., doubling every 10 years).

- Population Growth: From ~5 million in 10,000 BCE to 8.2 billion in 2025, reaching 1 trillion by ~2500, assuming technological advancements like interstellar colonization.

- Einstein-Level IQ: 0.0032% of the population (1 in 31,250) has an IQ ≥ 160, scaling from ~160 individuals in 10,000 BCE to ~32 million at 1 trillion.

We arrive at the following sigmoid-shaped curve.

Probability Model: Cumulative extinction probability after n discoveries: $P(\text{extinction}) = 1 - (1 - 0.0001)^n$.
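To get a feel for the numbers, here is a minimal sketch of that model in Python. It takes the 0.01% per-discovery risk and the ~10,000 discoveries by 2025 from the list above. One assumption is mine: I read “doubling every 10 years” as the cumulative number of discoveries doubling each decade after 2025. The function names are illustrative only.

```python
P_BLACK_BALL = 0.0001          # 0.01% chance per discovery
DISCOVERIES_BY_2025 = 10_000   # cumulative discoveries up to 2025
DOUBLING_YEARS = 10            # assumed doubling period after 2025

def cumulative_extinction_probability(n, p=P_BLACK_BALL):
    """Chance of at least one black ball in n independent draws."""
    return 1.0 - (1.0 - p) ** n

def discoveries_by(year):
    """Cumulative discoveries by `year` (not modelled before 2025).

    Assumption: the cumulative count doubles every DOUBLING_YEARS.
    """
    if year <= 2025:
        return DISCOVERIES_BY_2025
    return DISCOVERIES_BY_2025 * 2 ** ((year - 2025) / DOUBLING_YEARS)

def risk_after_2025(year, p=P_BLACK_BALL):
    """Extinction risk from discoveries made after 2025 only,
    i.e. conditional on having survived until 2025."""
    new_draws = discoveries_by(year) - DISCOVERIES_BY_2025
    return cumulative_extinction_probability(new_draws, p)

for year in range(2025, 2080, 10):
    print(year, f"{risk_after_2025(year):.1%}")
```

Under these assumptions, the first 10,000 draws alone already carry a cumulative risk of about 63%, and each further doubling pushes the curve rapidly towards 100%.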

If we are currently in the steep phase of exponential technological innovation, fueled by AI (ASI tbc) and ever more people in the timeline, surely the chances that we have already peaked grow with every passing day?

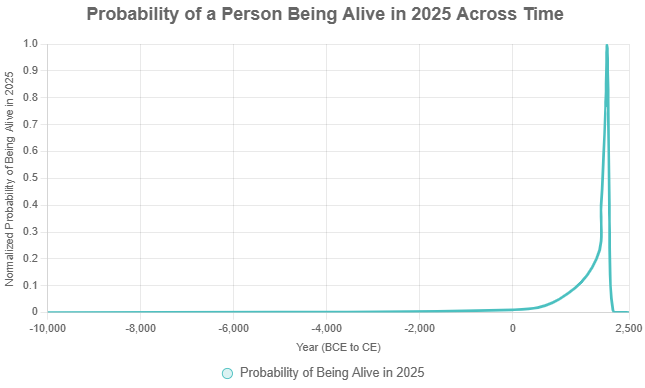

Peak Public Performance

Not that the above chart isn’t terrifying enough, but where do you, the reader, fit into the probability curve? What are the chances that you, specifically you, are alive today? Now we can get into the Doomsday Argument, a probabilistic framework that considers an individual’s position among all humans who will ever live to infer the likelihood of being alive at a particular moment.

If N is the total number of humans (past, present, and future), and you are the n-th human born (your birth rank), the probability of being alive at a specific time depends on your rank relative to N. Given that ~100 billion humans have lived by 2025, your birth rank is n ≈ 100 billion. The probability of being the n-th human, assuming a uniform distribution over N, is approximately 1/N, but the Doomsday Argument uses Bayesian reasoning to infer the likelihood of being alive now versus other times. It suggests that N is unlikely to be vastly larger than n, as this would make your current position improbably early.
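One common way to put a number on this is the simple confidence-bound version of the argument, usually attributed to J. Richard Gott. It is not necessarily the exact formulation behind the chart in this post, so treat the sketch below as an illustration under that assumption. If your fractional position n/N is equally likely to fall anywhere between 0 and 1, then with confidence c you are not among the first (1 − c) share of all humans, which gives N < n / (1 − c). The function name is hypothetical.

```python
def doomsday_upper_bound(birth_rank, confidence=0.95):
    """Upper bound on the total number of humans N.

    Assumption: your position n/N is uniform on (0, 1], so with
    probability `confidence` you are past the first (1 - confidence)
    share of all humans, i.e. N < n / (1 - confidence).
    """
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be strictly between 0 and 1")
    return birth_rank / (1.0 - confidence)

BIRTH_RANK = 100e9  # ~100 billion humans born by 2025

for confidence in (0.50, 0.90, 0.95):
    bound = doomsday_upper_bound(BIRTH_RANK, confidence)
    print(f"{confidence:.0%} confidence: N below {bound:.3g}")
```

At 95% confidence the bound lands at roughly 2 trillion humans in total, which is the same order of magnitude as the 1 trillion scenario used here.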

- Timeline: From 10,000 BCE (agricultural revolution) to ~2500 CE (1 trillion population).

- Population Growth:

  - 10,000 BCE: ~5 million.

  - 1800: 1 billion.

  - 2025: 8.2 billion.

  - Future: 100 billion by 2100, 500 billion by 2200, 1 trillion by 2500 (assuming exponential growth via technological advancements like interstellar colonization).

- Cumulative Births: ~100 billion humans by 2025, increasing to ~1 trillion by 2500.

- Einstein-Level IQ: 0.0032% of the population (IQ ≥ 160), scaling from 160 in 10,000 BCE to 32 million at 1 trillion, contributing to technological acceleration.

- Technological Discoveries: ~10,000 discoveries by 2025, with an exponential increase post-2025 (doubling every 10 years due to AI/ASI), influencing population growth and survival risks.

- Probability Model: The probability of being alive in 2025 is modeled as the proportion of humans alive in 2025 relative to the total N, adjusted for survival probabilities from extinction risks (0.01% per discovery, as in the Vulnerable World Hypothesis model above).
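The core of that last bullet is a simple ratio, which can be sketched as follows. The two totals (100 billion and 1 trillion) come from the list above. The survival weighting is my own simplified reading of “adjusted for survival probabilities”, and `p_survive` is a hypothetical parameter rather than a figure from this post.

```python
ALIVE_2025 = 8.2e9        # humans alive in 2025
TOTAL_IF_WE_STOP = 100e9  # N if humanity ended around now
TOTAL_IF_WE_GROW = 1e12   # N if we reach ~1 trillion cumulative births

def share_alive(total_ever, alive_now=ALIVE_2025):
    """Share of all humans who will ever live that is alive in 2025."""
    return alive_now / total_ever

def expected_share(p_survive):
    """Blend of the two scenarios.

    Assumption: with probability p_survive humanity reaches the
    1 trillion total; otherwise N stays near 100 billion.
    """
    return ((1.0 - p_survive) * share_alive(TOTAL_IF_WE_STOP)
            + p_survive * share_alive(TOTAL_IF_WE_GROW))

print(f"N = 100 billion: {share_alive(TOTAL_IF_WE_STOP):.2%}")
print(f"N = 1 trillion:  {share_alive(TOTAL_IF_WE_GROW):.2%}")
print(f"50/50 blend:     {expected_share(0.5):.2%}")
```

Either way the share is small: a little over 8% if the story ends now, and under 1% if it runs on to a trillion.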

Conclusion

Carpe diem.

Be the pond sage, unbothered, moisturised, happy in your lane. Flourish.

You’re lucky to be alive right now.